Introduction

Artificial Intelligence (AI) and Machine Learning (ML) are transforming the business world by automating complex processes, recognizing trends, and providing valuable insights.

However, one of the major problems companies face is understanding how AI models reach their conclusions. This is where Explainable AI (XAI) comes in: it aims to make AI-driven decisions clear, easy to understand, and trustworthy.

What is Explainable AI?

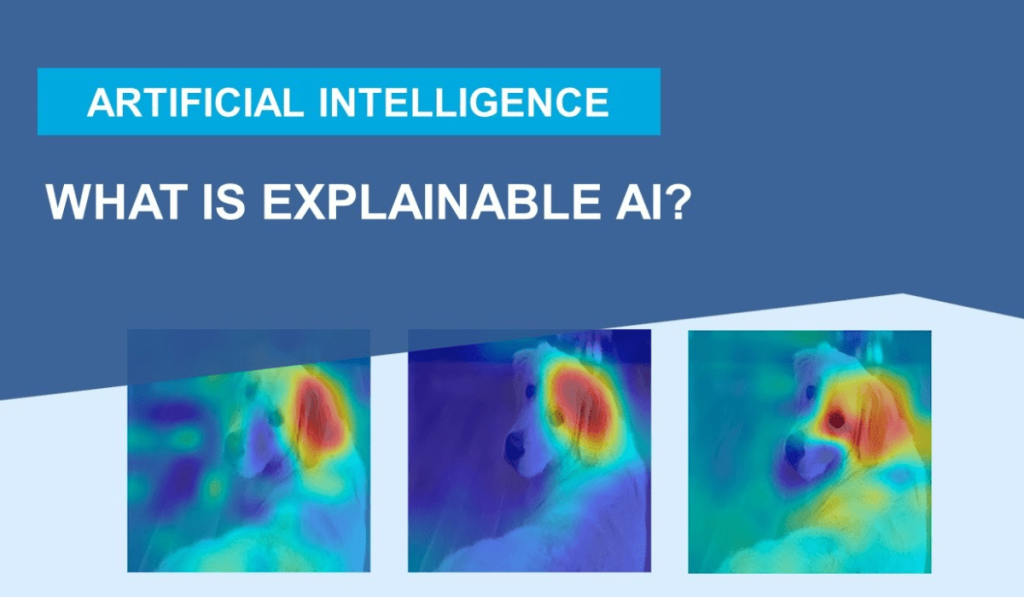

Explainable AI refers to the techniques and methods that make the outputs of machine learning models understandable to humans. Unlike traditional “black-box” AI, where decisions are made without discernible reasoning, XAI shows how and why a specific prediction or decision was made.

This transparency is vital in sectors where decisions must be accountable and reliable, such as healthcare, finance, and the legal industry.

The Importance of Explainable AI

Transparency in AI systems can help businesses spot mistakes, reduce bias, and comply with legal requirements. For instance, in the banking sector, XAI can explain why a loan request was accepted or denied, which supports accountability and fairness.

In healthcare, explainable models provide clarity in diagnoses, helping doctors judge when to trust AI suggestions in crucial decisions. Furthermore, regulations such as the GDPR require organizations to give individuals meaningful information about the logic behind certain automated decisions, making XAI not merely a best practice but, in some cases, part of legal compliance.

How Explainable AI Works

Explainable AI draws on approaches such as feature importance scores, inherently interpretable models like decision trees, and Local Interpretable Model-agnostic Explanations (LIME) to help users understand the logic of AI models. These techniques allow stakeholders to identify which factors influenced an output and to judge whether a prediction is well founded.
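To make the idea of feature importance concrete, here is a minimal sketch of permutation importance, one common way to estimate it: shuffle one input column at a time and measure how much the model's accuracy drops. Everything in it is an assumption made for illustration, including the toy `model` function, its weights, the feature names, and the synthetic data. It uses only the Python standard library.

```python
import random

# Hypothetical "black-box" loan model, invented for illustration.
# It approves (1) when a weighted score clears a threshold; age has zero weight.
def model(row):
    income, debt, age = row
    return 1 if 0.8 * income - 0.6 * debt + 0.0 * age > 0.2 else 0

def accuracy(predict, rows, labels):
    """Fraction of rows where the prediction matches the label."""
    hits = sum(1 for r, y in zip(rows, labels) if predict(r) == y)
    return hits / len(rows)

def permutation_importance(predict, rows, labels, n_features, repeats=20, seed=0):
    """Average drop in accuracy when one feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)  # break the link between feature j and the label
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(predict, shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances

# Synthetic applicants: (income, debt, age), each scaled to the range 0..1.
data_rng = random.Random(42)
rows = [(data_rng.random(), data_rng.random(), data_rng.random()) for _ in range(500)]
labels = [model(r) for r in rows]  # labels come from the model itself in this toy setup

names = ["income", "debt", "age"]
importances = permutation_importance(model, rows, labels, n_features=3)
for name, value in zip(names, importances):
    print(f"{name}: {value:.3f}")
```

In this toy setup, age scores zero because the hypothetical model ignores it, while income and debt score above zero. With a real model, the same procedure would be run on held-out data rather than on labels generated by the model itself.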

XAI also assists developers in refining algorithms by detecting irregular patterns or unexpected biases in the data, leading to more reliable and ethical AI systems.
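One simple way to look for this kind of unexpected bias is a flip test: change only a sensitive attribute and count how often the decision changes. The sketch below is an illustration under stated assumptions, not a complete fairness audit. The two scoring functions, the `group` attribute, and the applicant grid are all hypothetical.

```python
# Hypothetical scoring function that (undesirably) penalizes one group.
def biased_model(applicant):
    score = 0.7 * applicant["income"] - 0.5 * applicant["debt"]
    if applicant["group"] == "B":  # hidden penalty, invented for this example
        score -= 0.15
    return score > 0.2

# Hypothetical scoring function that ignores the group attribute.
def fair_model(applicant):
    return 0.7 * applicant["income"] - 0.5 * applicant["debt"] > 0.2

def flip_rate(predict, applicants, attribute, values):
    """Share of applicants whose decision changes when only `attribute` is swapped."""
    changed = 0
    for a in applicants:
        original = predict(a)
        for v in values:
            if v == a[attribute]:
                continue
            counterfactual = {**a, attribute: v}  # copy with one field changed
            if predict(counterfactual) != original:
                changed += 1
                break
    return changed / len(applicants)

# Deterministic grid of applicants covering both groups.
applicants = [
    {"income": i / 10, "debt": d / 10, "group": g}
    for i in range(11)
    for d in range(11)
    for g in ("A", "B")
]

biased_rate = flip_rate(biased_model, applicants, "group", ("A", "B"))
fair_rate = flip_rate(fair_model, applicants, "group", ("A", "B"))
print(f"biased model flip rate: {biased_rate:.2%}")
print(f"fair model flip rate:   {fair_rate:.2%}")
```

A flip rate above zero means the decision depends on the sensitive attribute for at least some applicants, which is a signal for developers to investigate. A rate of zero only shows that the attribute is not used directly. It does not rule out bias that enters through correlated features.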

Conclusion

As AI becomes integrated into daily business operations, Explainable AI helps maintain transparency and accountability. By making machine learning decisions more understandable, companies can adopt AI responsibly, improve decision-making, and strengthen trust among stakeholders.

Applying XAI is not just a technical necessity; it is a strategic requirement for businesses that want to use AI ethically and effectively.